Announcing the Matterport3D Research Dataset

At Matterport, we’ve seen firsthand the tremendous power of 3D data in several domains of deep learning. We’ve been doing research in this space for a while and have long wanted to release a fraction of our data for use by researchers. We’re excited that groups at Stanford, Princeton, and TUM have painstakingly hand-labeled a wide range of spaces contributed by our customers and made these labeled spaces public as the Matterport3D dataset.

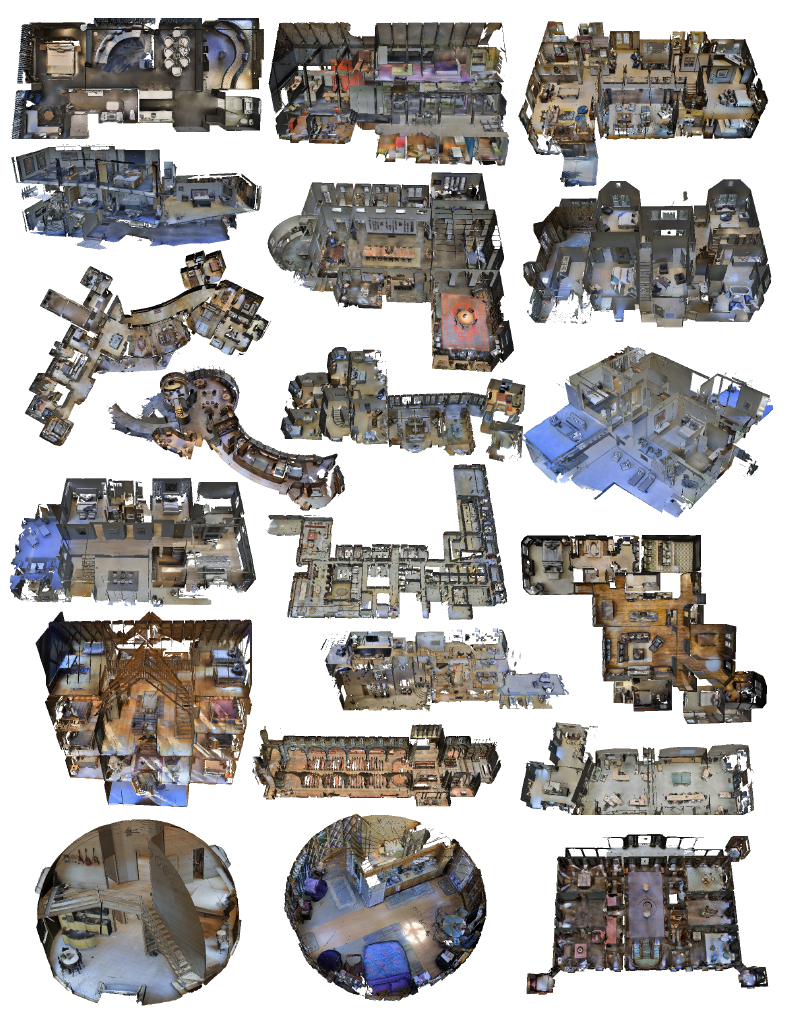

This is the largest public dataset of its kind in the world, and labeling it was a very significant effort.

The presence of very large 2D datasets such as ImageNet and COCO was instrumental in the creation of highly accurate 2D image classification systems in the mid-2010s, and we expect that the availability of this labeled 3D+2D dataset will have a similarly large impact on improving AI systems’ ability to perceive, understand, and navigate the world in 3D. This has implications for everything from augmented reality to robotics to 3D reconstruction to better understanding of 2D images. You can access the dataset and sample code here and read the paper here.

What’s in the dataset?

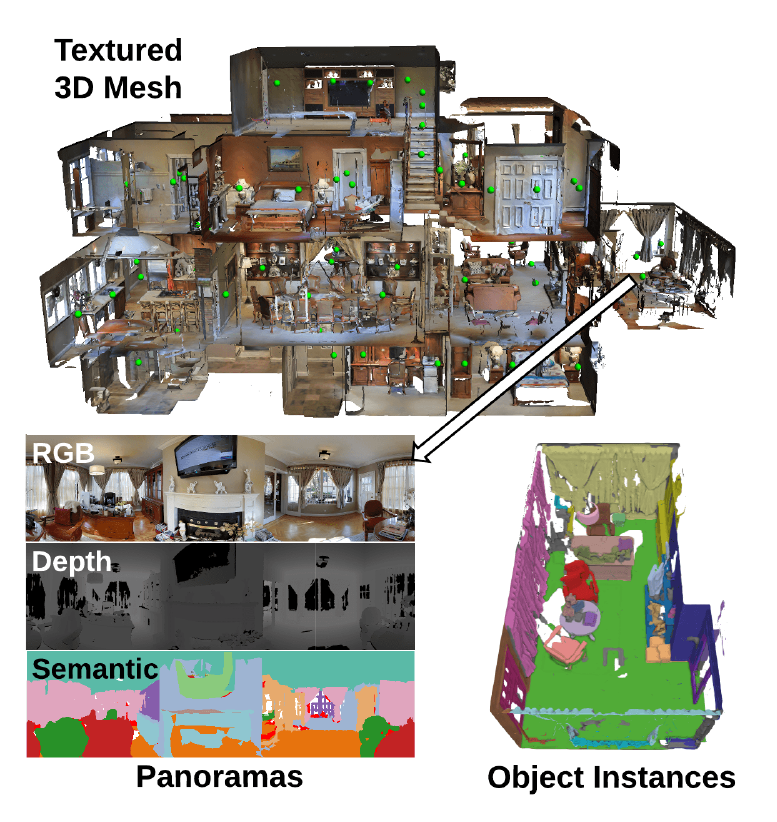

This dataset contains 10,800 aligned 3D panoramic views (RGB + depth per pixel) from 194,400 RGB + depth images of 90 building-scale scenes. All of these scenes were captured with Matterport’s Pro 3D Camera. The 3D models of the scenes have been hand-labeled with instance-level object segmentation. You can explore a wide range of Matterport 3D reconstructions interactively at https://matterport.com/gallery.
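The headline numbers imply a few useful averages. The arithmetic below derives them from the quoted totals only; the post doesn't say whether every panorama draws on the same number of images or how the views are spread across scenes, so treat the results as averages rather than per-scene facts.

```python
# Headline figures quoted in the announcement (not read from the dataset files).
NUM_SCENES = 90
NUM_PANORAMAS = 10_800
NUM_IMAGES = 194_400

# Both quotients are exact, so integer division loses nothing here.
assert NUM_IMAGES % NUM_PANORAMAS == 0 and NUM_PANORAMAS % NUM_SCENES == 0

images_per_panorama = NUM_IMAGES // NUM_PANORAMAS  # average RGB-D images per panoramic view
panoramas_per_scene = NUM_PANORAMAS // NUM_SCENES  # average panoramic views per scene
images_per_scene = NUM_IMAGES // NUM_SCENES        # average RGB-D images per scene

print(images_per_panorama, panoramas_per_scene, images_per_scene)  # 18 120 2160
```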

Why is 3D data important?

3D is key to our perception of the world as humans. It enables us to visually separate objects easily, quickly model the structure of our environment, and navigate effortlessly through cluttered spaces.

For researchers building systems that understand the content of images, this 3D training dataset provides a vast amount of ground-truth labels for the size and shape of objects in those images. It also provides multiple aligned views of the same objects and rooms, allowing researchers to test how robust their algorithms are to changes in viewpoint.
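"Aligned" means each image comes with a camera pose in a shared coordinate frame. Combined with per-pixel depth, that lets you map a pixel in one view to where the same surface point appears in another view, which is one way to set up viewpoint-robustness tests. The sketch below assumes an ideal pinhole camera with no lens distortion, a camera-to-world rotation convention, and made-up intrinsics and poses; the `Camera` class is hypothetical and is not the dataset's file format or tooling, so check the released calibration conventions before relying on it.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class Camera:
    """Hypothetical ideal pinhole camera (no lens distortion) with a known pose."""
    fx: float                    # focal length in pixels, x
    fy: float                    # focal length in pixels, y
    cx: float                    # principal point, x
    cy: float                    # principal point, y
    rotation: list[list[float]]  # 3x3 camera-to-world rotation matrix
    center: Vec3                 # camera position in world coordinates

    def backproject(self, u: float, v: float, depth: float) -> Vec3:
        """Lift pixel (u, v) with its depth reading to a world-space point."""
        p_cam = ((u - self.cx) * depth / self.fx,
                 (v - self.cy) * depth / self.fy,
                 depth)
        R = self.rotation
        return tuple(sum(R[i][j] * p_cam[j] for j in range(3)) + self.center[i]
                     for i in range(3))

    def project(self, p_world: Vec3) -> Vec3:
        """Project a world-space point into this view, returning (u, v, depth)."""
        d = [p_world[i] - self.center[i] for i in range(3)]
        R = self.rotation
        # World-to-camera rotation is the transpose of the camera-to-world one.
        p_cam = [sum(R[j][i] * d[j] for j in range(3)) for i in range(3)]
        z = p_cam[2]
        return (self.fx * p_cam[0] / z + self.cx,
                self.fy * p_cam[1] / z + self.cy,
                z)


IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

# Two made-up views of the same room: view B sits 1 m to the right of view A.
cam_a = Camera(500.0, 500.0, 320.0, 240.0, IDENTITY, (0.0, 0.0, 0.0))
cam_b = Camera(500.0, 500.0, 320.0, 240.0, IDENTITY, (1.0, 0.0, 0.0))

# The center pixel of view A, observed 2 m away, lands at u = 70 in view B.
point = cam_a.backproject(320.0, 240.0, 2.0)
print(cam_b.project(point))  # (70.0, 240.0, 2.0)
```

Comparing an algorithm's output at a pixel in view A with its output at the reprojected pixel in view B gives a simple consistency measure across viewpoints. In practice you would also need an occlusion check, for example comparing the projected depth with view B's own depth reading at that pixel.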

For researchers building systems that interpret data from 3D sensors (e.g., augmented reality goggles, robots, phones with stereo or depth sensing, or 3D cameras like ours), having a range of real 3D spaces to train and test on makes development easier.

What’s possible with this dataset?

Many things! Here are a few of the areas Matterport is researching.

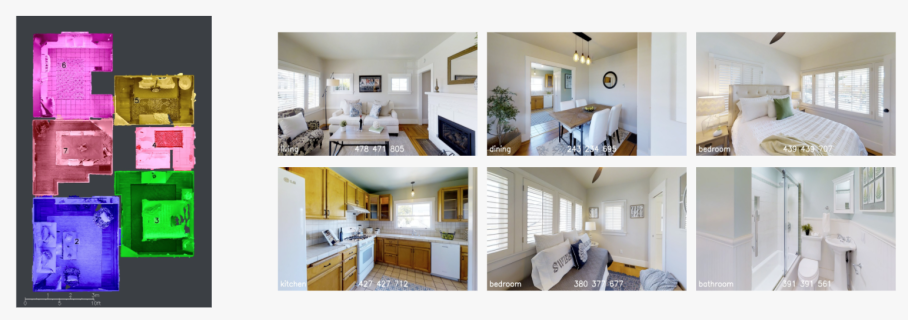

We’ve used it internally to build a system that segments spaces captured by our users into rooms and classifies each room. It’s even capable of handling situations in which two types of room (e.g. a kitchen and a dining room) share a common enclosure without a door or divider. In the future, this will help our customers skip the task of having to label rooms in their floor plan views.

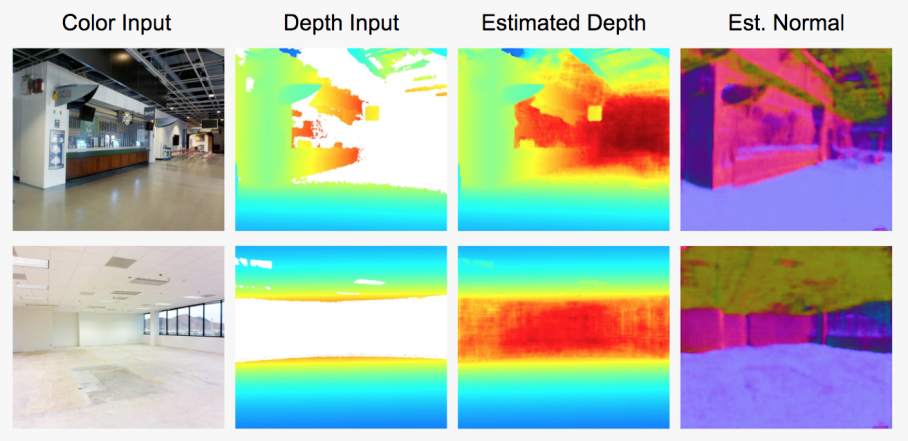

We’re also experimenting with using deep learning to fill in areas that are beyond the reach of our 3D sensor. This could enable our users to capture large open spaces such as warehouses, shopping malls, commercial real estate, and factories much more quickly, as well as capture new types of spaces. Here’s a preliminary example in which our algorithms use color and partial depth to predict the depth values and surface orientations (normal vectors) for areas that are too distant for the depth sensor to pick up.

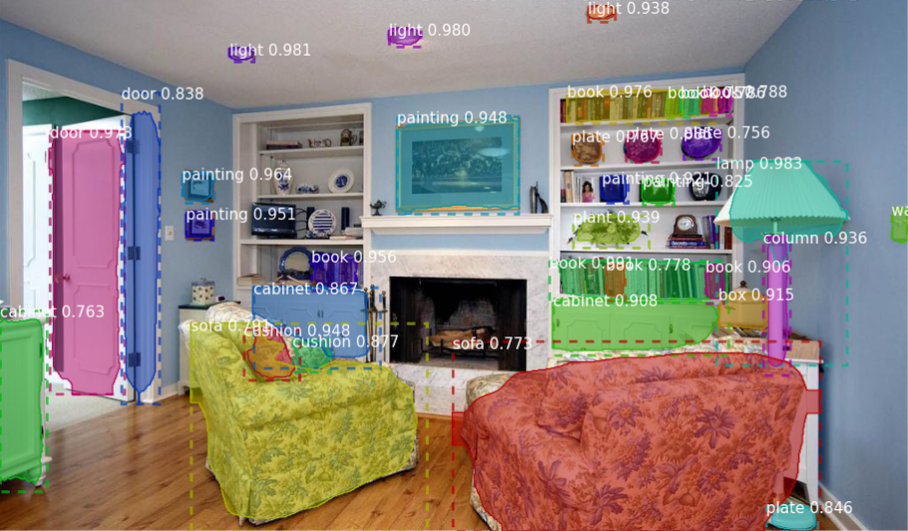
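The post doesn't describe the network that predicts depth and normals, and the sketch below makes no attempt at the learned part. It only illustrates the geometric link between the two outputs: given a depth map (measured or predicted) and pinhole intrinsics, normals can be approximated by back-projecting neighboring pixels and taking the cross product of the two tangent vectors. `depth_to_normals`, its parameters, the camera convention (looking along +z, rows increasing along +y), and the sample values are all assumptions for illustration.

```python
import math


def depth_to_normals(depth, fx, fy, cx, cy):
    """Estimate unit surface normals from a depth map (list of rows, in meters).

    Hypothetical helper: assumes an ideal pinhole camera looking along +z with
    image rows increasing along +y. Depth <= 0 marks a missing reading (for
    example, a surface beyond the sensor's range). Forward differences are
    used, so the last row and column, and any pixel next to a missing reading,
    get None.
    """
    h, w = len(depth), len(depth[0])

    def point(r, c):
        # Back-project pixel (row r, column c) into camera coordinates.
        z = depth[r][c]
        return ((c - cx) * z / fx, (r - cy) * z / fy, z)

    normals = [[None] * w for _ in range(h)]
    for r in range(h - 1):
        for c in range(w - 1):
            if min(depth[r][c], depth[r][c + 1], depth[r + 1][c]) <= 0:
                continue  # a needed neighbor has no depth, so skip this pixel
            p, p_right, p_down = point(r, c), point(r, c + 1), point(r + 1, c)
            du = [p_right[i] - p[i] for i in range(3)]  # tangent along the row
            dv = [p_down[i] - p[i] for i in range(3)]   # tangent along the column
            # The normal is perpendicular to both tangents: their cross product.
            n = [du[1] * dv[2] - du[2] * dv[1],
                 du[2] * dv[0] - du[0] * dv[2],
                 du[0] * dv[1] - du[1] * dv[0]]
            length = math.sqrt(sum(x * x for x in n))
            if length == 0.0:
                continue  # degenerate neighborhood
            if n[2] > 0:
                n = [-x for x in n]  # orient the normal back toward the camera
            normals[r][c] = tuple(x / length for x in n)
    return normals


# A wall facing the camera 2 m away, with one missing reading in the middle.
depth = [[2.0, 2.0, 2.0],
         [2.0, 0.0, 2.0],
         [2.0, 2.0, 2.0]]
normals = depth_to_normals(depth, fx=500.0, fy=500.0, cx=1.0, cy=1.0)
print(normals[0][0])  # approximately (0, 0, -1): facing back toward the camera
print(normals[1][1])  # None: no depth at this pixel
```

Forward differences keep the sketch short; central differences, or fitting a small plane to each pixel's neighborhood, would be less sensitive to depth noise.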

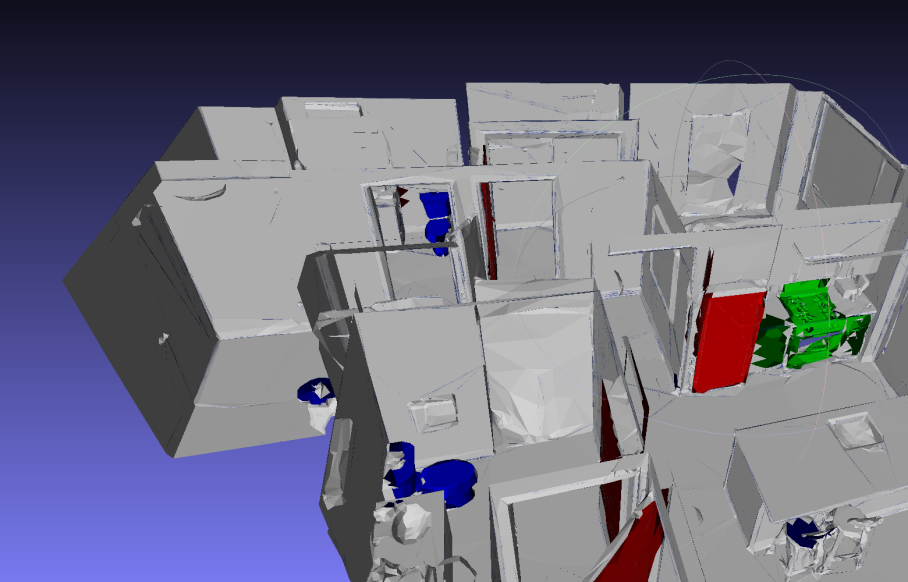

We’re also using it to start fully segmenting the spaces captured by our customers into objects. Unlike the 3D models we have now, these fully segmented models would let you precisely identify the contents of the space. This enables you to do a wide range of things, including automatically generating a detailed list of the contents and characteristics of a space and automatically seeing what the space would look like with different furniture.

Ultimately, we want to do for the real world what Google did for the web – enable any space to be indexed, searched, sorted, and understood, enabling you to find exactly what you’re looking for. Want to find a place to live that has three large bedrooms, a sleek modern kitchen, a balcony with a view of a pond, a living room with a built-in fireplace, and floor-to-ceiling windows? No problem! Want to inventory all the furniture in your office, or compare your construction site’s plumbing and HVAC installations against the CAD model? Also easy!

The paper also shows off a range of other use cases, including improved feature matching via deep learning-based features, surface normal vector estimation from 2D images, and identification of architectural features and objects in voxel-based models.

Why not use a synthetic 3D dataset?

Synthetic datasets are an exciting area of research and development, but systems trained purely on synthetic data are limited in how well they work on real data. The tremendous variety of scene appearances in the real world is very difficult to simulate, and we’ve found synthetic datasets most useful as a first round of training before training on real data, rather than as the main training step.

What’s next?

We’re excited to hear what you all end up doing with this data! As noted above, you can access the data, code, and 3DV conference paper here, and we look forward to partnering with research institutions on a range of projects.

If you’re passionate about 3D and interested in an even bigger dataset, Matterport internally has roughly 7,500 times as much 3D data as this dataset contains, and we are hiring for a range of deep learning, SLAM, computational geometry, and other computer vision positions.

We’d like to thank Matthias Niessner, Thomas Funkhouser, Angela Dai, Yinda Zhang, Angel Chang, Manolis Savva, Maciej Halber, Shuran Song, and Andy Zeng for their work in labeling this dataset and developing algorithms to run on it.

Matterport’s internal work in this area was made possible by Waleed Abdulla, Yinda Zhang, and Gunnar Hovden.

Enjoy exploring these spaces! We certainly have!